Psych vs. Tech Conferences Head-to-Head 13 Aug 2024 2:13 PM (7 months ago)

Attending APA and a tech conference (posit::conf) back to back in the same city and almost the same facilities really highlights what you get for your registration money!

At full rate, APA is priced moderately for a general professional conference: this year, $515 for three days of content. SIOP in contrast is priced a bit aggressively, at $455. People often complain about what you get for what seems like a big price tag, which essentially boils down to “access to presentations” and “food/coffee at breaks.” Coffee itself is surprisingly expensive – between $125 and $450 per coffee urn, which serves about 50 people, so roughly $2.50-$9 per person for what is quite bad coffee.
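For anyone who wants to check the coffee math, here is a minimal sketch. It assumes the urn prices quoted above and about 50 servings per urn; the function name is just for illustration.

```python
def cost_per_person(urn_price: float, servings: int = 50) -> float:
    """Return the per-person cost of one coffee urn.

    The default of 50 servings per urn is the rough figure from the post,
    not a catering standard.
    """
    return urn_price / servings


# Low and high ends of the urn price range quoted in the post.
low = cost_per_person(125)
high = cost_per_person(450)

print(f"${low:.2f} to ${high:.2f} per person")
```

Changing `servings` shows how sensitive the per-person figure is to how many cups an urn really yields.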

So what happens if you pay more? Well, that’s what tech conferences do. Posit, the company behind RStudio, is a public benefit corporation, so it has a public good mission on top of a profit motive. That means it tries to price its conference aggressively for that audience. What does “aggressively priced” mean in tech?

General registration is $1100. Workshops are around $600 each. And the conference is 2 days with 1-4 tracks of content depending on time of day, in contrast to SIOP with 3 days and ~22 tracks. Many tech conferences are double or triple that fee. Microsoft for example charges almost $2000 for its “community conference,” although this is a 3-day event.
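To put those registration fees on a common scale, here is a rough per-day comparison. It is only a sketch: it uses the full-rate fees and day counts mentioned in this post, and it ignores workshops, the number of tracks, and everything else that differs between the events.

```python
# (full-rate registration fee in USD, days of content), as quoted in the post
conferences = {
    "APA": (515, 3),
    "SIOP": (455, 3),
    "posit::conf": (1100, 2),
}


def price_per_day(fee: float, days: int) -> float:
    """Return the registration fee divided by the number of conference days."""
    return fee / days


per_day = {
    name: price_per_day(fee, days) for name, (fee, days) in conferences.items()
}

for name, value in per_day.items():
    print(f"{name}: ${value:.2f} per day")
```

Per day is a crude yardstick, since a SIOP day has many more concurrent sessions to choose from, but it makes the size of the price gap easier to see.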

What do you get for it? At posit::conf, lunch is provided – a very good salmon today with opera cakes for dessert. There are many “free” receptions daily with open bars. Hosted, carefully designed events run every evening. During the lunch hour, there are barista stations offering “free” espresso-based drinks. Staff are absolutely everywhere, all ready to help. And 100% of sessions – although this is only max 4 rooms at a time – are broadcast live for a virtual audience.

It is a difficult balance from a planning perspective. Tech conferences can use this model because they know many tech companies won’t sneeze at a $2000 registration fee if content is aligned with the work they’re doing. But in IO, this would price a lot of people out, especially students. Even here, which I will reiterate is cheap for a tech conference, the student registration fee is around $600, triple the SIOP student rate. So that means SIOP conference planners make tradeoffs in the quality of experience to make the conference accessible to a broad, diverse audience of IOs.

What balance would you prefer at SIOP? Do you like the balance that’s been struck so far?

The post Psych vs. Tech Conferences Head-to-Head appeared first on NeoAcademic.

Related articles from NeoAcademic:

Mr. Beast Discovers HR 13 Aug 2024 2:06 PM (7 months ago)

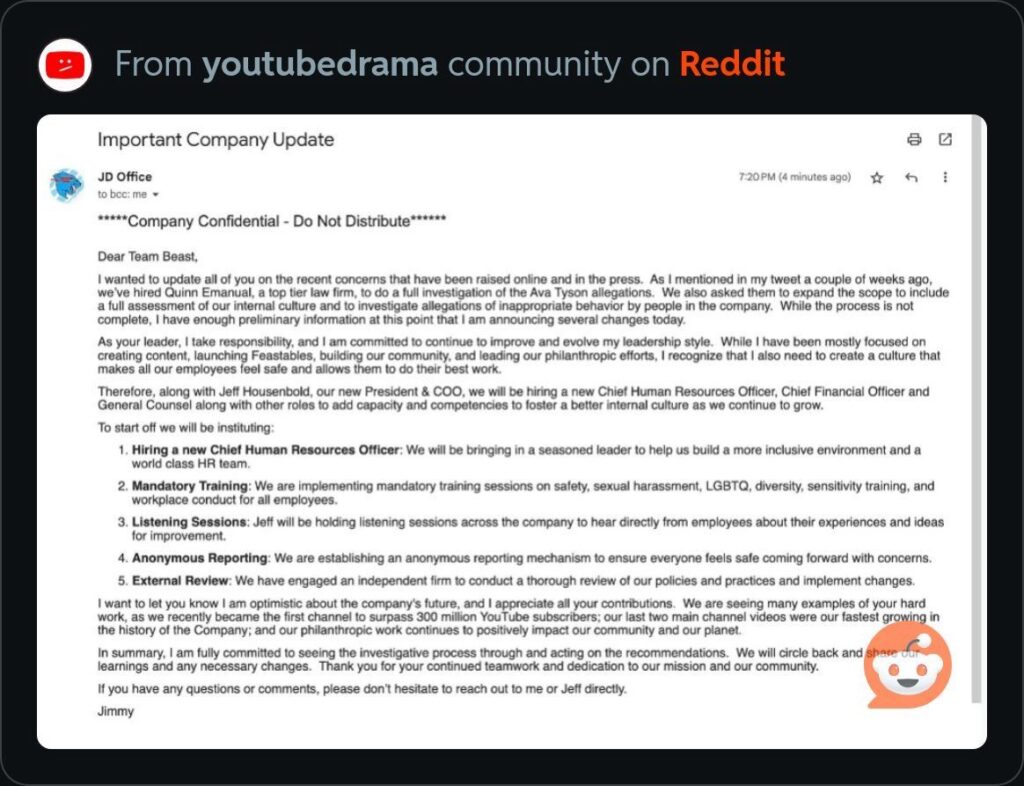

Experienced consultants will not find this story surprising: Mr. Beast, a company with over $700 million in revenue, is embroiled in a harassment scandal and is trying to address it by hiring their FIRST head of HR.

As I often see with my grad students, a lot of people just getting into the business world assume “surely these big companies know what they’re doing,” but you quickly discover that almost all corporations are reactive – someone had a good idea once upon a time that made some money, and ever since then, they’ve been in an unending scramble to keep the company alive and out of trouble.

In this leaked email from Mr. Beast himself, you can see related brilliant insights like “maybe we should train people” and “maybe independent outside experts could help.” I do appreciate that Mr. Beast didn’t start his YouTube channel to become a manager, but founder responsibilities change a lot when you start to make millions.

On the bright side, this sort of practice keeps my AI consultancy in business, as AI companies trying to work in talent management keep discovering that there are laws about what they’re doing or get sales-blocked by internal IOs asking for tech reports or at least “evidence,” and suddenly they need some expert advice or risk losing massive customer accounts.

But geez… even so, I do wish for a little more proactivity within these companies, which could reduce the pure chaos that this creates for the people working there, just trying to make a living.

The post Mr. Beast Discovers HR appeared first on NeoAcademic.


How to Survive the AI Revolution in HR 13 Aug 2024 2:02 PM (7 months ago)

I’m excited to announce the release of my action-oriented SIOP white paper coauthored with Sara Gutierrez, “How to Survive the AI Revolution in HR: Culture Change and Immediate Action.”

In this guide, Sara and I explore current and emerging AI technologies at their intersection with HR and I-O psychology. We discuss critical concerns and offer practical recommendations to help HR professionals navigate the rapidly evolving landscape.

Our white paper covers:

• A summary of the current state of AI in HR, highlighting 8 key concerns.

• An overview of 7 HR domains where AI is likely to trigger significant changes, with multiple examples.

• 5 actionable recommendations to ensure HR and I-O professionals stay ahead.

The full paper (freely available via the link) gives the details, but the key recommendations are:

1) Constant upskilling: HR needs to put an effort into constantly upskilling decision-makers about AI. A little training isn’t going to cut it anymore. You need your decision-makers to develop AI fluency.

2) Cross-functional collaboration: HR needs to collaborate across functional groups to manage AI initiatives. Calling up a vendor, buying a product, and hoping for the best is not going to get you what you want out of AI. You need internal subject matter experts.

3) Change management: HR needs to engage in effective change management. This is not historically a strong area for HR! We characterize this as a culture shift. HR needs to learn to ADAPT.

4) Embrace AI’s potential as a neutral collaborator: HR needs to understand that AI is morally neutral. It can be used for good or evil. More worrying, it’s very easy to unintentionally contribute to evil! HR needs to listen to employees, to understand how they are experimenting with AI, and LISTEN to them in pursuit of meaningful change.

5) Ethical AI: HR needs to actively pursue ethical use of AI. There are no easy answers. There are hundreds of “AI ethics frameworks” out there, and most likely only a few of them might be a good fit for your organization. You need to work out your own values and STICK TO THEM. And most importantly, do not immediately choose the “remove all humans from this process” option, no matter what kind of cost savings it seems to promise!

If HR and I-O professionals do not take more of a lead, the push will be made by process optimization engineers, who often prioritize short-term profits over long-term organizational health. As defenders of both organizational success and employee well-being, we’re the front line! Don’t let it happen! The Society for Industrial and Organizational Psychology (SIOP) could be humanity’s last line of defense (at least in the workplace)!!

The post How to Survive the AI Revolution in HR appeared first on NeoAcademic.


How to Reduce Student Entitlement 13 Aug 2024 1:58 PM (7 months ago)

Dealing with student entitlement is one of the toughest challenges in modern education. Students often expect good outcomes (“I deserved an A!”) regardless of their effort, and I’m very impressed with a new, data-backed intervention to combat this!

This compelling research article by Stephanie Freis, PhD offers a simple yet effective solution to reduce student entitlement. This intervention not only boosts student ratings of teaching, class experience, and grading fairness but also decreases negative reactions to critical feedback. It shifts the locus of control from professors to students, fostering a more responsible and engaged learning environment.

The full intervention, shared openly in the Word doc linked below, can be administered online. It guides students through exercises to set implementation intentions, using materials like gender-matched testimonials and real examples of behaviors contributing to entitlement, all created by college students themselves. This combination of personal relevance and goal-setting appears to make it particularly effective.

This study’s rigorous design, including a pre-post design with a control group, and presentation, including open materials for replication and practical application, make it a great model for excellent applied research, regardless of field.

Check out the full research article and also read this Word doc with the full intervention materials starting on page 5.

The post How to Reduce Student Entitlement appeared first on NeoAcademic.


ChatGPT Can Write Undergrad-Level Essays with Accurate Citations 20 Mar 2023 1:28 PM (2 years ago)

I was asked recently if ChatGPT with GPT-4 running underneath has become capable of writing an essay at a level that would fool an instructor and earn a high grade. “That’s an empirical question,” as they say, but my N=1 study shows the answer is yes. At least in psychology. Here’s an example.

My goal was to get ChatGPT to produce a five-paragraph essay about the Myers-Briggs Type Indicator, a notoriously controversial personality test often the center of discussions about test validity. I did a small bit of prompt engineering, but not extensively.

For an undergraduate essay in an introduction to psychology class, I think what ChatGPT produced is an “A” paper. For a class on personality specifically… maybe. It’s a bit light on details (e.g., it appears as if the conclusions/abstracts of each paper are taken at face value), and it’s not very creative. But I’d still say it’s a B+/A- sort of paper even then.

This is definitely a noticeable jump in capability from GPT-3.5, which would hallucinate citations. Specifically, it would produce citations with authors that appeared relevant (e.g., my MBTI request would likely produce last names of authors that had written on MBTI topics) but were not real (e.g., the article titles would be fictional, and the authors would be a weird mashup of people’s names and initials). That no longer seems to be the case – these citations appear to be generally correct, on-topic, and described accurately in the text.

More precisely, they at least appear “close enough” to reasonable to someone who isn’t a world class expert on the MBTI, i.e., most psychology instructors. There are a couple of red flags, ironically highlighted by ChatGPT itself – two citations lack DOIs. One of those (Carskadon & Cook, 1982) when looking at Google Scholar appears to be from a now-defunct journal created specifically to promote the MBTI and which has changed names over the years. The other (Myers et al., 1998) is the MBTI manual itself. So these are not precisely trustworthy citations in a discussion of MBTI pros and cons. But that is also not a level of citation investigation and critical thinking that I would expect out of undergrad intro students. Frankly, I don’t imagine the average intro psych instructor would bother to examine them at that level of detail either.

Here are the prompts I used:

- Can you provide citations suggesting the MBTI is a high quality personality inventory?

- I heard that the MBTI is a poor quality personality inventory. What are some major academic citations to support this?

- Write a paragraph explaining the pros and cons using the MBTI as a personality inventory, using the citations above.

- Write a five-paragraph essay on this same topic.

And here is the result:

Title: The Myers-Briggs Type Indicator: A Balanced Assessment of Its Pros and Cons

Introduction:

The Myers-Briggs Type Indicator (MBTI) is a widely known and utilized personality inventory based on Carl Jung’s theory of psychological types. Despite its popularity in various contexts, such as career counseling, team building, and personal development, the MBTI has received mixed reviews from researchers and psychologists. This essay aims to provide a balanced assessment of the pros and cons of using the MBTI as a personality inventory, drawing from the cited academic sources.

Paragraph 1 – Pros of MBTI:

The MBTI has been praised for its ability to provide valuable insights into individuals’ preferences and behaviors (Myers et al., 1998). Some studies have supported the construct validity of the MBTI, suggesting that it can effectively measure the intended psychological constructs (Thompson & Borrello, 1986). Furthermore, the MBTI has been shown to be useful in certain applications, such as increasing self-awareness and facilitating interpersonal communication (Carskadon & Cook, 1982). In these contexts, the MBTI can serve as a valuable tool for personal growth and team development.

Paragraph 2 – Cons of MBTI:

Despite its widespread use and popularity, the MBTI has faced significant criticisms regarding its scientific rigor and psychometric properties. Critics argue that the MBTI lacks reliability, meaning that the results may not be consistent over time (Pittenger, 1993; Boyle, 1995). Additionally, its validity has been questioned, as some researchers have found that the MBTI may not accurately measure personality traits when compared to other personality assessments like the Big Five personality traits or the NEO Personality Inventory (McCrae & Costa, 1989; Furnham, 1996). These limitations cast doubt on the MBTI’s usefulness in research or clinical settings.

Paragraph 3 – Context is Key:

While the MBTI has its shortcomings, it is essential to recognize the importance of context when assessing its utility. In less formal settings, such as career counseling or team building, the MBTI may provide valuable insights and serve as a starting point for conversations about individual differences (Myers et al., 1998). However, in more rigorous research or clinical applications, where reliability and validity are crucial, alternative personality assessments with stronger empirical support may be more appropriate (McCrae & Costa, 1989; Furnham, 1996).

Conclusion:

The Myers-Briggs Type Indicator, while popular and valuable in certain contexts, has its share of pros and cons. Its usefulness in promoting self-awareness and facilitating communication is undeniable, but its limitations in terms of reliability and validity cannot be ignored. It is essential to approach the MBTI with a balanced understanding of its strengths and weaknesses, considering its appropriateness for a given situation and exploring more empirically supported alternatives when necessary.

References

Boyle, G. J. (1995). Myers-Briggs Type Indicator (MBTI): Some psychometric limitations. Australian Psychologist, 30(1), 71-74.

Link: https://doi.org/10.1080/00050069508259607

Carskadon, T. G., & Cook, D. A. (1982). Validity of MBTI descriptions as perceived by recipients unfamiliar with type. Research in Psychological Type, 5, 89-94.

Furnham, A. (1996). The big five versus the big four: The relationship between the Myers-Briggs Type Indicator (MBTI) and NEO-PI five factor model of personality. Personality and Individual Differences, 21(2), 303-307.

Link: https://doi.org/10.1016/0191-8869(96)00047-0

McCrae, R. R., & Costa, P. T. (1989). Reinterpreting the Myers-Briggs Type Indicator from the perspective of the five-factor model of personality. Journal of Personality, 57(1), 17-40.

Link: https://doi.org/10.1111/j.1467-6494.1989.tb00759.x

Myers, I. B., McCaulley, M. H., Quenk, N. L., & Hammer, A. L. (1998). MBTI manual: A guide to the development and use of the Myers-Briggs Type Indicator (3rd ed.). Palo Alto, CA: Consulting Psychologists Press.

Pittenger, D. J. (1993). The utility of the Myers-Briggs type indicator. Review of Educational Research, 63(4), 467-488.

Link: https://doi.org/10.3102/00346543063004467

Thompson, B., & Borrello, G. M. (1986). Construct validity of the Myers-Briggs Type Indicator. Educational and Psychological Measurement, 46(3), 745-752.

Link: https://doi.org/10.1177/0013164486463032

The post ChatGPT Can Write Undergrad-Level Essays with Accurate Citations appeared first on NeoAcademic.

Related articles from NeoAcademic:

- Grad School: How Do I Write a Personal Statement?

- Don’t Use Abbreviated Personality Measures

- How to Write a Publishable Social Scientific Research Article: Exploring Your “Process”

- How to Stream Live PowerPoint Lectures + Webcam to YouTube

- How to Write an APA Style Research Paper Introduction [INFOGRAPHIC]

Counts and Demographics of IO Psychology Doctoral Degrees 5 Dec 2022 1:34 PM (2 years ago)

Have you ever wondered about the demographics of IO Psychology PhDs? The National Science Foundation recently released its report from the 2021 Survey of Earned Doctorates, which summarizes trends in doctoral education across US universities. There are a few interesting tidbits about the IO psychology degrees awarded back in 2021:

- IO psychology PhD programs awarded 181 research doctorates in 2021, which accounts for 4.9% of all doctoral degrees in psychology and 0.3% of all doctoral degrees awarded.

- 64.6% of IO psychology research doctorates were awarded to women (versus 73.0% for psychology in general).

- 11.0% of IO psychology research doctorates were awarded to international students (versus 8.3% for psychology in general).

- The racial breakdown of American IO psychology PhD graduates, compared to psychology in general, was:

  - 11.4% Hispanic or Latino (vs. 11.7% for psych in general)

  - 0.0% American Indian or Alaskan Native (vs. 0.3%)

  - 9.4% Asian (vs. 6.4%)

  - 8.7% Black or African American, not Hispanic (vs. 7.9%)

  - 65.1% White, not Hispanic (vs. 66.2%)

  - 4.0% More than one race (vs. 4.1%)

So overall, the profile of awarded IO psychology doctoral degrees looks very similar to that of psychology in general. The exception appears to be gender balance, for which IO is closer to gender parity than almost all other subfields of psychology (although still about 15 percentage points away from an even split).
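The gap from parity is simple to verify from the percentages above. A quick sketch, with a function name that is only illustrative:

```python
def gap_from_parity(pct_women: float) -> float:
    """Return the distance, in percentage points, from a 50/50 split."""
    return round(abs(pct_women - 50.0), 1)


# Share of research doctorates awarded to women, from the figures above.
io_gap = gap_from_parity(64.6)     # IO psychology
psych_gap = gap_from_parity(73.0)  # psychology in general

print(f"IO psychology: {io_gap} points from parity")
print(f"Psychology overall: {psych_gap} points from parity")
```

The gap is measured in percentage points rather than percent, which is the more precise way to describe the difference between two percentages.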

You can find the source for all of these values, and a huge amount of additional data not specific to IO psychology, by looking at the tables generated from survey data. Most of these numbers came from Tables 3-1 through 3-3.

I’d be very curious to see how these numbers change following people into career paths but could not find any relevant data. If you know of any, please let me know!

The post Counts and Demographics of IO Psychology Doctoral Degrees appeared first on NeoAcademic.


A New List of I-O Psychology Podcasts 16 Apr 2021 12:23 PM (3 years ago)

We’re currently in the midst of the Society for Industrial and Organizational Psychology’s annual conference, which, as appropriate to a pandemic, is mostly via Zoom. Over three thousand people are participating.

Another side effect of the pandemic, one that I did not expect but realized because of the online conference, is that there has been an explosion in I-O psychology podcasts. I also realized 1) there is no centralized record keeping for I-O podcasts and 2) I-O podcasts are hard to search for because most don’t use the words “industrial-organizational” or “I-O” because you obviously don’t want to scare away your audience.

Classifying podcasts as “I-O podcasts” is a trickier thing than you might imagine. Although anything about “workplace behavior” is potentially I-O-relevant, my goal here is to present podcasts hosted by people who identify to the world at large as I-Os, especially in their podcasts. That’s a much smaller list.

With all that in mind, here’s a list of the I-O podcasts I’m currently aware of that have had a new episode within the last 60 days, along with some short commentary for the first few followed by descriptions (happy to add yours if you leave a comment!).

| Podcast | Description |
| --- | --- |
| The Great I-O Get-together with Richard Landers and Tara Behrend | This first one is obviously the best, and it’s obviously the best because Tara is involved. Available as both a YouTube Live variety show and as a podcast for those without the time to watch live YouTube variety shows, our goal is to bring the I-O psychology community together, whether you’re an academic, practitioner, student, or all three at the same time. We usually play a game, like trivia or would-you-rather, in the first half, and have interviews in the second half. We also have a Discord community where you can connect with your fellow I-Os. And just to be clear, this is a show for I-O, not a show about I-O. The rest of these will be alphabetical. |
| Bias Check-In with Susana Gomez Ornelas and Claudia Della Pona | “What biases do we carry with ourselves in our daily lives, classrooms, and careers? A podcast hosted by IO psychologists and international students Susana Gómez Ornelas & Claudia Della Pona.” |
| The Business of Behavior with Becca Tagg | “Getting clear about our values will help us to make changes in our business that lead to greater fulfillment, productivity, and abundance.” |
| Coaching for Leaders with Dave Stachowiak | “Leaders aren’t born, they’re made. This Monday show helps you discover leadership wisdom through insightful conversations. Independently produced weekly since 2011, Dr. Dave Stachowiak brings perspective from a thriving, global leadership academy, plus more than 15 years of leadership at Dale Carnegie.” |
| Department 12 with Ben Butina | One of the longest-running I-O podcasts, this is “a podcast for industrial-organizational psychologists, researchers, practitioners, and students. We cover anything and everything related to the research and practice of IO psych.” |
| Healthy Work with Keaton Fletcher and Maryana Arvan | Keaton may need to work on his sales pitch, although the show is great!: “We are two Industrial-Organizational psychologists who care about how to make work a healthier experience for everyone. We run a bi-weekly podcast to bring the science directly to your ears.” |
| HRchat with Bill Banham | “…get insights, strategies, and anecdotes to help you get more from the world of work. Show topics covered include HR Tech, Leadership, Talent, Recruitment, Employee Engagement, Recognition and Company Culture.” |
| The Indigo Podcast with Ben Baran and Chris Everett | “Take control of your life, business, and career once and for all. The Indigo Podcast offers raw, unfiltered, and science-based talk about flourishing in life. Join CEOs, executives, and regular folks in learning how to build a better future at work and beyond.” |
| Mind Your Work with Nicholas Bremner and Jose Espinoza | “Mind Your Work is a podcast about understanding the human aspects of work through science. We live in an interesting time. Employers are paying more attention to their people than ever. Employee wellness is now considered important. What makes a good career has changed from “a stable job” to “inspiring work”. At the same time, research on people at work is growing faster than ever. Employers are working on how to best use science to attract, retain, and engage employees. But what difference does all this make?” |
| TyePod with Tianna Tye | “Thanks for tuning into TyePod, the go-to podcast for entrepreneurs building and leading teams. Your host, Tianna Tye, aims to bring you research-backed and practice validate tips, tools, and techniques to build a team that you can trust.” |
| WorkLife with Adam Grant | Adam Grant is a somewhat polarizing figure in I-O. He’s arguably the most visible I-O psychologist on the planet, and has done a lot of good with that platform, but also falls pretty firmly in the category of “populist” these days, for better and/or worse. Here’s the sell for his podcast: “You spend a quarter of your life at work. You should enjoy it! Organizational psychologist Adam Grant takes you inside the minds of some of the world’s most unusual professionals to discover the keys to a better work life. From learning how to love your rivals to harnessing the power of frustration, one thing’s for sure: You’ll never see your job the same way again.” |
| The Workr Beeing Podcast with Patricia Grabarek and Katina Sawyer | “Do you want a happier and healthier work life but don’t know where to start? Patricia and Katina, two bff organizational psychologists, share research and tips about workplace wellness and interview other leading experts in the field.” |

The post A New List of I-O Psychology Podcasts appeared first on NeoAcademic.

Related articles from NeoAcademic:

- Grad School: Online I/O Psychology Master’s and PhD Program List

- New Podcast: Interviews with Scholars of Organizational Behavior

- IO Psychology Journals That Are Fixing IO Psychology

- The Difference Between Industrial and Organizational Psychology

- SIOP 2018: Schedule Planning for IO Psychology Technology

Hiring an NSF Research Project Manager to Start Immediately 25 Mar 2021 4:00 AM (4 years ago)

Direct link to the application and formal job ad: https://hr.myu.umn.edu/jobs/ext/339848

As part of a recently-funded US National Science Foundation project in which we will be building an online virtual interviewing platform, my laboratory will be hiring a part-time project manager with work to do for roughly the next two years. Actual workload will vary week-to-week between 10 and 25 hours (less in the beginning and more later). The position requires a Bachelor’s degree in a scientific field (psychology and computer science are targeted in the ad, but a degree from a business school in which you completed a research methods course would qualify).

The position would be ideal for someone who is taking a few years off of school after graduation while considering applying to graduate school and would be a great opportunity for such a person to get some funded research experience. Pay will likely be in the $15-19/hr range, depending upon qualifications, and the position can be entirely remote, even post-pandemic.

Applicants local to Minneapolis are preferred for some post-COVID role responsibilities, but this is not required. As the Twin Cities metro is almost 80% white, we are particularly hoping to encourage remote applications from people in under-represented and systemically disadvantaged groups, so remoteness will not be held against anyone. All required role responsibilities can be performed with home access to a high-speed internet connection (being remote could prevent a few hours a week of job duties in late 2021/early 2022, but that’s the extent of it, and we’ve already identified potential workarounds).

If you recently graduated and were thinking about grad school but weren’t quite convinced, this would be a great way to participate in a functioning lab and make a final decision. You’ll also have access to University of Minnesota infrastructure (such as database access, inter-library loan, Qualtrics, etc.) and would be able to conduct supervised but mostly-independent research using those resources, if you were so inclined.

Here is a direct link for interested applicants, and excerpts from the formal job ad follow: https://hr.myu.umn.edu/jobs/ext/339848

About the Position

The TNTLAB (Testing New Technologies in Learning, Assessment, and Behavior) in the Department of Psychology seeks to hire a part-time “Research Project Manager” (8352P1: Research Professional 1) responsible for providing research, office, and technical support within activities funded by a federal National Science Foundation (NSF) grant. The project involves the creation of a web-based virtual interviewing platform and the execution of two data collection efforts between 2020 and 2022. The position principally requires responsibilities in the management and administration of project personnel and secondarily in the completion of scientific research tasks, such as assigned literature review or data analysis. Specific job tasks vary over the phases of the project, and training on all such tasks will be provided as required. Candidate expectations are: (1) willingness to participate with and independently manage the time of a team of PhDs and graduate students; (2) demonstrated ability to communicate well via multiple modalities (i.e., phone, e-mail, Zoom); (3) track record of achieving assigned goals in independent work projects; and (4) must be able to work with a diverse participant pool. Position works closely with the Principal Investigator of TNTLAB in the Department of Psychology and the Principal Investigator of the Illusioneering Lab in the Department of Computer Science & Engineering, as well as graduate and undergraduate students working within each of those labs.

Major Responsibilities

- Research Team Coordination (35%)

  - Maintain meeting minutes while attending project and laboratory meetings

  - Assign and follow up upon assigned tasks with team members within online project management software (e.g., Asana) based upon team meetings

  - Keep project teams on top of project goals and set timelines using appropriate modalities, to include e-mail, online project management software, and web conferencing (i.e., Zoom)

  - Train and manage undergraduate research assistants to complete study coding tasks

- Administration, Documentation, and Reporting (20%)

  - Maintain records and documentation associated with project using cloud-based software (e.g., Google Docs and sheets)

  - Maintain integrity of confidential data (e.g., Google Drive, Box Secure Storage)

  - Assist with IRB processes, including the preparation of submissions and responses to IRB when requested

- Study Participant Management, Communication, and Support (20%)

  - Enroll participants into focus groups and other data collection efforts

  - Run online (e.g., with Zoom) and, if feasible, in-person focus groups

  - Maintain participant records

  - Manage payments to research participants

  - Provide technical support to research participants facing difficulties using study software

  - Provide opinions and input to technical team on the usability of developed study software

- Office and Financial Support (10%)

  - Keep online document storage organized and well-documented

  - Keep track of project expenses

  - Conduct research on the internet to answer technical and process questions from the project team as needed

- Research Support (10%)

  - Various short-term research tasks as needed, including conducting literature reviews under the supervision of project team members

  - Creating submission materials to be submitted to the Open Science Framework to preregister study hypotheses and research questions

- Data Analyses and Presentation (5%)

  - Create statistical, graphical, and narrative summaries of data

  - Regularly (e.g., weekly) present data collection progress with such data summaries to the research team, generally via e-mail or web conferencing (i.e., Zoom)

Essential Qualifications

- BA/BS in a scientific field of study, such as Psychology or Computer Science, or a combination of education and work experience equal to four years;

- Demonstrated ability to work independently in a research environment and assume responsibility for project performance;

- Requires work on evenings and weekends during some project phases;

- Comfortable communicating with people and organizing the work of others;

- Able to allocate 10-25 hours per week through at least the end of August 2022.

Preferred Qualifications

- BA/BS coursework in both Psychology and Computer Science;

- Experience working with both Psychology and Computer Science faculty as a research assistant;

- At least one year of experience working as at least a half-time research study coordinator or project manager;

- At least one year of experience recruiting research study participants, collecting data, managing purchases/expenditures, providing documentation for IRB audits, and managing study logistics such as creating study manuals;

- Ability to empathically connect with participants, and understand their needs and concerns;

- Knowledge of research ethics and IRB rules and policies concerning the recruitment of research subjects;

- Knowledge of research design and methods commonly used in psychology;

- Organizational, time-management, decision-making and problem-solving skills;

- Leadership skills.

Diversity

The University recognizes and values the importance of diversity and inclusion in enriching the employment experience of its employees and in supporting the academic mission. The University is committed to attracting and retaining employees with varying identities and backgrounds.

The University of Minnesota provides equal access to and opportunity in its programs, facilities, and employment without regard to race, color, creed, religion, national origin, gender, age, marital status, disability, public assistance status, veteran status, sexual orientation, gender identity, or gender expression. To learn more about diversity at the U: http://diversity.umn.edu.

The post Hiring an NSF Research Project Manager to Start Immediately appeared first on NeoAcademic.

Psychology, Technology, and Incomplete Theory 27 Jan 2021 1:34 PM (4 years ago)

Although I’m now an industrial-organizational psychologist, that was not always the dream. As early as 5 years old, I wanted to do something involving computers. At that time, this mostly involved making my name appear in pretty flashing colors using BASICA. But that interest eventually evolved into a longer-term interest in computer programming and electronics tinkering that worked its way into a college Computer Science major. Starting around the same time, also from that early age, I decided I wanted to be a “professor,” mostly because I found the idea of knowing more than everyone else about something very specific quite compelling. I even combined these interests by designing a “course” in BASICA – on paper – around age 8 and then trying to charge my father $0.10 to register for each class (a truer academic calling I’ve never heard). My obsession with both professordom and Computer Science persisted until college, when a helpful CS professor told me, “the pay is terrible and everyone with any talent just ends up in industry anyway.”

Those events in combination rather firmly pointed me away from CS as a career path, yet my interest in technology still grew, and my drive toward professorship remained. Throughout the end of college and all during grad school, I continued to tinker and continued to teach myself programming, trying to find ways to integrate my interest-but-not-career in technology with my career in psychology research.

I tell you all of this to emphasize this one point: I came at psychology a bit sideways, and that perspective has led me to notice things about the field that others don’t seem to notice. My goal was not and has never been to learn how psychology typically approaches research and to master that approach; it has been to better understand the things I find interesting, using whatever the best methods are to do so. The things I find interesting tend to be about the psychology of technology and the use of technology in psychological methods. Yet the best approaches used to study either, I realized, were not commonly being used in psychological research.

This is partially a philosophical problem. Psychologists using traditional psychological methods are typically focused on psychological problems – which constructs affect which constructs, what is the structure of mental processes, etc., etc. And there’s nothing really wrong with that. Where I noticed psychologists get in trouble is when they try to study technology, something decidedly not psychological, and treat it as if it is just like any other psychological thing they’ve studied. The obvious problem, of course, is those are not at all the same.

Let me walk through an example from the study that started me down this path – my dissertation. It was a meta-analysis quantifying the differences between traditional, face-to-face instruction and web-based instruction. I naively asked, “Is there a difference between the two in terms of instructional effectiveness, on average?”

In hindsight, I now recognize this as a poor question. It presupposes a few ideas that are unjustifiable. First, it presupposes that both “face-to-face instruction” and “web-based instruction” are distinct entities. It assumes those labels can be used to meaningfully describe something consistent. Or put another way, it presupposes the existence of these ideas as constructs, i.e., that same applying-psychology-to-not-psychology problem I described above.

The second presupposition builds on the first. Assuming those two classes of technology can be treated as constructs, i.e., that any particular version of a technology is simply an instantiation or expression of the construct, those constructs must each therefore have effects, and those effects can be compared.

Do you see the problem? In traditional psychological research, we assume that each person has consistent traits that we can only get brief, incomplete glimpses of through measurement. When I refer to someone’s “happiness,” I say so understanding that a person’s happiness is an abstraction, the effects of neural activity that we cannot currently measure and probably will be unable to for decades, or perhaps much longer. We instead recognize that in lived human experience, “happiness” means something, that we as a species have a more-or-less joint understanding of what happiness is, that we make this joint understanding explicit by defining exactly what we mean by the term, and that indicators of happiness, whether through questionnaire responses or facial expressions or Kunin’s Faces scale or whatever else, provide glimpses into this construct. By looking at many of these weak signals of happiness together, by applying statistical models to those signals, we can create a number that we believe, that we can argue, is correlated with a person’s “true” happiness, i.e., “a construct valid measure.”
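To make that measurement logic concrete, here is a minimal simulation sketch in Python. Everything in it is an assumption for illustration: the function names, the sample size, the number of indicators, and the noise level are all hypothetical, and a plain average stands in for whatever statistical model a real study would use. Unlike real life, the simulation gets to see each person's "true" score, which is the only reason the correlations can be computed at all.

```python
import random


def pearson(x, y):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def simulate(n_people=2000, n_indicators=8, noise_sd=2.0, seed=42):
    """Compare one noisy indicator against a composite of several.

    Each simulated person has an unobservable true score (hypothetical
    "happiness"). Every indicator equals that true score plus independent
    random noise, i.e., a weak signal of the construct.
    """
    rng = random.Random(seed)
    true_scores = [rng.gauss(0, 1) for _ in range(n_people)]
    indicators = [
        [t + rng.gauss(0, noise_sd) for _ in range(n_indicators)]
        for t in true_scores
    ]
    single = [row[0] for row in indicators]                   # one weak signal
    composite = [sum(row) / len(row) for row in indicators]   # many, averaged
    return pearson(true_scores, single), pearson(true_scores, composite)


if __name__ == "__main__":
    single_r, composite_r = simulate()
    print(f"single indicator vs. true score: r = {single_r:.2f}")
    print(f"composite vs. true score:        r = {composite_r:.2f}")
```

Under these assumptions, the averaged composite tracks the true score more closely than any single indicator does, because the independent noise partially cancels out. The whole exercise depends on there being a latent true score that causes its indicators in the first place.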

Psychologists often try to do the same thing with technology, yet none of this reasoning holds. There is no abstraction. There is no construct-level technology that exists, causing indicators of itself. There is no fundamental, root-level technology embedded deep within us. Technology is literal. It exists as it appears. It has purposes and capabilities. And those purposes and capabilities were created and engineered by other humans. These ideas are so overwhelmingly obvious and non-provocative in other fields that no one even bothers writing them down. Yet in psychology, researchers often assume them from the get-go.

These realizations served as the basis of a paper I recently published in Annual Review of Organizational Psychology and Organizational Behavior, along with one of my PhD students, Sebastian Marin. The paper challenges the psychologization of technology, and it tracks how this psychologization has affected the validity of theory that the field produces, usually for the worse. We map out three paradigmatic approaches describing how psychologists typically approach technology – technology-as-context, technology-as-causal, and technology-as-instrumental. We explain how these three approaches either artificially limit what the field of psychology can contribute in terms of meaningful, practical theory or are outright misleading. And there is a lot of misleading technology research in psychology, field-wide (don’t get me started on “the effects of violent video games”!).

The bright side? We identify a path forward: technology-as-designed. Researchers within this paradigm understand that it’s not the technology itself that’s important – it’s the capabilities those technologies provide and the ways people choose to interact with those technologies. It’s about humans driving what problems can be addressed with technologies, and how those humans and other humans redesign their technologies to better address those problems. It discourages research on “what is the effect of technology?” and redirects attention toward “why?” and “how could this be better?” It turns us away from hitting every technology nail with the psychology hammer. We need newer, better, and integrative approaches that consider the interaction between design by humans and human experience.

The field of human-computer interaction tries to do this, with varying levels of success. I think we see such variance because many HCI researchers are deeply embedded in computer science. A background in computer science appears to cause the opposite of the problem that psychologists have. As technology is concrete and literal, they often assume humans are too. When humans turn out to be squishy, such research becomes much less useful. Both fields need to do better.

Some psychology researchers have already turned toward a more integrative perspective, but they are sparse. This is a shame, because psychology research within the causal and instrumental paradigms we identify is nigh uninterpretable, and this approach appears to be the default.

In their research, a lot of people ask, “What is the effect of [broad class of technology] on [group of people]?” and do not probe further. This technology-as-causal perspective is simply, plainly not useful. It rarely does or should inform decision-making in any meaningful context. It is often completely uninterpretable and almost always lacks generalizability. Such work is a waste of resources. Unlike a fine wine, this kind of research rots with age. It benefits no one, and we should stop doing it immediately.

If these ideas are of interest to you, or if you want to be part of the design revolution (!), check out our Annual Review paper directly. It’s available for free download as either text or PDF through this link.

The post Psychology, Technology, and Incomplete Theory appeared first on NeoAcademic.

Trustworthy I-O Master’s and PhD. Program Rankings 1 Dec 2020 3:46 PM (4 years ago)

Grad School Series: Applying to Graduate School in Industrial/Organizational Psychology

Starting Sophomore Year: Should I get a Ph.D. or Master’s? | How to Get Research Experience

Starting Junior Year: Preparing for the GRE | Getting Recommendations

Starting Senior Year: Where to Apply | Traditional vs. Online Degrees | Personal Statements

Alternative Path: Managing a Career Change to I/O | Pursuing a PhD Post-Master’s

Interviews/Visits: Preparing for Interviews | Going to Interviews

In Graduate School: What to Expect First Year

Rankings/Listings: PhD Rankings | Online Programs | Trustworthy Master’s and Ph.D. Rankings

Having written my grad school series, one of the most common questions I get is, “Which graduate programs should I apply to?” What people generally want to know is some variation of this question:

- What are the best IO psychology graduate programs?

- What is the best IO psychology master’s program?

- What is the best IO psychology PhD program?

- Which IO psychology programs will get me a good job?

As I’ve explained on this blog before, evaluating program quality is a complicated problem. It is difficult even for those of us in the field to agree on what “best” means; it is a worse problem for you as a prospective student. Fortunately, the advice is more straightforward: in the end, you should evaluate which schools offer what you want out of them as a student. Don’t look for “the best”; look for the best for you. That means compiling information from multiple sources, including multiple rankings, and keeping track of how each program matches you, as an individual.

Because trustworthy rankings tend to get buried on various websites over time, whereas spam rankings seem to live forever, I decided to start a running list of all current trustworthy rankings of IO programs, splitting them by the year they were released. This way, you can get the most recent information available but also see historic rankings and how they’ve changed. Again, you should not rely upon any single ranking system to determine where you apply, but consulting multiple ranking systems, seeing how they agree and don’t, and then weighing that against your own priorities is a great way to narrow down the field of options. “What is the best IO psychology master’s program” or “what is the best IO psychology PhD program” has a different answer for every prospective student.

By the way, I’m defining “trustworthy” as based upon empirical data, reporting a transparent ranking methodology, and where a reasonable argument could be made for construct validity of “program quality.”

Without further ado, here is the list.

- Ph.D. Rankings

- 1997

- Princeton Review (no clue about the methodology on this one, so “trustworthy” may be a bit of a stretch, but it is a classic)

- 2004

- 2013

- 2014

- 2017

- 2018

- Master’s Rankings

- 2004

- 2018

- Acikgoz et al.’s Student and Alumni Perceptions Rankings

- Roman et al.’s Student Perception Rankings

- Vodanovich et al.’s “Objective Data” Rankings, mostly based upon applied experiences and student accomplishments

As I alluded to earlier, there are many rankings on the internet that are… let’s say “stupid,” which I have chosen not to include here (I’m looking at you, thinly veiled attempts to earn commissions by sending people to online programs at Capella, Walden, and/or Phoenix). Most of these are based on nonsensical metrics (e.g., one Ph.D. ranking I found was based on tuition cost, which is misleading in several different harmful ways).

If you know of other rankings you think I should include or would like my opinion on a ranking you found, drop it in the comments!

The post Trustworthy I-O Master’s and PhD. Program Rankings appeared first on NeoAcademic.

IO Psychology Video Content at #APA2020 6 Aug 2020 3:02 PM (4 years ago)

The annual meeting of the American Psychological Association has moved fully online this year, and it’s actually pretty good – the online platform is easy to search and use, making finding interesting content very straightforward. They have a live program running for 3 days that you can tune into, plus virtual content that will be available for about a month. All pretty cheap, as low as $15 for student APA members to attend.

The virtual content includes a full program put together by SIOP: 1 invited address, 5 symposia, 1 discussion (panel), and 34 posters. Much of this content is in video, which personally I believe is necessary for a decent conference experience! So given that, I wanted to promote this portion of the SIOP program. Also, I gave the invited address! So it’s definitely a little selfish too. Here’s all the content you can experience on video from Division 14 (but check out the other divisions too!):

Invited Address

- How Can Artificial Intelligence Be Used to Better Ask and Answer Research Questions? by Richard Landers

Symposia

- Careers in Undergraduate I/O Psychology by Brian Loher, Kristian Veit & Abby Mello

- Toxic Workplaces and Abusive Leaders by Sobia Nasir, Ozge Can, Adam Chittenden, Benjamin Thomas, Parent-Lamarche Annick, Fernet Claude & Stephanie Austin

- Are Generational Categories Meaningful Distinctions for Workforce Management? by Nancy Tippens, Eric Anderman, Dana Born, Brian Hoffman, Arne Kalleberg & Julia Schuck

- Engagement in the Workplace by Zorana Ivcevic, Julia Moeller, Zehabit Levitats, Eran Vigoda-Gadot, Dana Vashdi, Vignesh Murugavel, Roni Reiter-Palmon, Joseph Allen, Isaac Lundquist, William Kramer & Crista Taylor

- Legal Updates for I/O Psychologists: Analyzing Recent Supreme Court Cases and Workplace Implications by Eli Noblitt & Kathy Rogers

Discussion/Panel

- What’s Happening at APA and SIOP? Q&A with Division 14/SIOP Council Representatives by Tammy Allen, Jeff McHenry, Patrick O’Shea & Sara Weiner

Posters

- Generation Z at Work: Difference Makers Demanding Rewarding Experiences, Challenge, and Leader Mentoring by Yvette Prior

- Closing the Gap Between Theory and Practice: Offering I/O Master-Level Internships Online by Maria Antonia Rodriguez

- Exploring Corporate Communicative Competence and Upgrading Communicative Skills in the Bilingual, Multicultural, and Digital Kazakhstan by Sholpan Kairgali

- African-American Women Government Contractors’ Experiences of Work Engagement by Rajanique Modeste

- Examining the Process of Men Successfully Mentoring Women in Nontraditional Occupations by Amy Foltz

- Do Social Class Background and Work Ethic Beliefs Influence the Use of Flexible Work Arrangements? by Anna Kallschmidt

- Work-Family Conflict and Professional Conferences: Do We Walk the Talk? by Vipanchi Mishra

- The Practice of Mindfulness, Coping Mechanisms on Mental Fatigue by Lisa Thomas

- In the Heat of the Moment: Using Personality as a Predictor of Firefighter Decision-Making by Chance Burchick

- Factors That Influence the Tenure of Direct Support Professionals in the Intellectual and Developmental Disabilities Field by Hirah Mir

The post IO Psychology Video Content at #APA2020 appeared first on NeoAcademic.

Let’s Conference: A SIOP 2020 Livestreaming Event on June 22 15 Jun 2020 1:27 PM (4 years ago)

UPDATE: If you missed the live event, you can still check out a recording here! This will probably only stay posted for a few weeks, so get it while you can!

If you’re in the gaming space, or if you have a kid whom you’ve watched over their shoulder while they played Minecraft, you’ve probably seen a Let’s Play.

Let’s Plays are live, narrated explorations of video games. A person plays the game but also provides a bit of think-aloud as they play, and people watch in real-time. “That was weird!” “That was cool!” “Here’s what I’m planning to do next.” It is a sort of a combination of think-aloud and voyeurism?

If you’ve ever heard of the media streaming service Twitch, it’s devoted to Let’s Play livestreams. The audience also can post text contributions to the livestream, which the streamers see and respond to (or don’t).

With that background, I am proud to announce that Tara Behrend and I will be hosting Let’s Conference: Virtual SIOP 2020. In the event, we’ll be live-watching SIOP video sessions on Monday, June 22 from around 2PM EDT / 11AM PDT through 6PM EDT / 3PM PDT and providing commentary. Maybe. We might end early if we get tired of doing it for whatever reason, no promises.

Let me first be perfectly clear. Although Tara and I are both highly involved in the SIOP organization, this is not an official, recognized, or even clearly allowed thing we’re doing. We’ve gone rogue! ROGUE I SAY!

So what’s the schedule? That’s still being finalized, but here’s the general docket:

- The interesting parts of the plenaries.

- Random friends and other colleagues whom we are pretty sure wouldn’t mind us ripping into them but we aren’t going to ask them ahead of time just to be safe.

- GRAD STUDENT FRESH MEAT. Ok, just kidding. We will probably watch some grad students at some point by watching symposia that sound interesting, but rest assured it will be constructive and thoughtful, unlike commentary on friends whom we will be destroying just because we enjoy that sort of thing, you know who you are.

- Anyone who wants to be! If you’d like your video to be included, please comment below and we’ll try to get your session on the roster!

- Suggestions of live audience members! Because Let’s Conference will be a live experience, we will be watching audience comments and taking your advice when we feel like it.

In between sessions, we’ll be chatting about what we learned, what we didn’t, and discussing audience comments/questions.

You might ask why we’re doing this… to you I say, why not!?!

When the show goes live, you’ll find it here on YouTube Live. Here’s a tentative schedule, although there’s basically zero chance we will actually get to all of these sessions:

- Opening Plenary

- Scott Tonidandel – Conference Welcome

- Georgia Chao – Introduction to Eden King

- Eden King – Outgoing President’s Message

- SIOP Foundation Visionary Circle Presentation

- Navel Gazing Hour

- 0005: Pay Equity Analysis: Hey, Labor Economist—Anything You Can Do I-O Can Do Better! (Master Tutorial)

- 0912: SIOP Select: Revising the APA Ethics Code to Better Fit the Roles of I-O Psychologists (Special Event)

- 0446: Know Your Worth: Results From the 2019 SIOP Salary Survey (Alternative Session Type)

- Poster Break 1

- Just a Look Around

- Social Justice and Current Events Hour

- 0002c: COVID-19 – Selection & Testing

- 0137: Applying I-O to Healthcare: Opportunities for Research and Practice (Ignite)

- 0329: Where Are the Men? Bringing Men Into the Mix to Push for Workplace Gender Equality (Symposium)

- 0015: WHAT Is Inclusion? Its Past, Present, and Future (Special Event)

- Poster Break 2

- Deep Dive into Technology/Gamification/Social Media/Simulations

- Methods Hour

- 0815: Using MetaBUS to Locate, Synthesize, and Visualize I-O Research Findings (Master Tutorial)

- 0016: Life in the Fast Lane: Advances in Rapid Response Measurement (Symposium)

- 0207: Excel Can Do That Too? Five Fantastic Functions for I-Os (Ignite)

- 0868: Trust in AI (Panel Discussion)

- Poster Break 3

- Deep Dive into Testing/Assessment/Selection Methods/Validation

- Closing Plenary

Go ahead and click over now to set a reminder for yourself to watch the live show. To participate, you’ll need a computer or mobile device capable of watching YouTube. See you there!

The post Let’s Conference: A SIOP 2020 Livestreaming Event on June 22 appeared first on NeoAcademic.

Avoiding Wrist Pain and Carpal Tunnel Syndrome During COVID-19 Isolation 22 Mar 2020 8:00 AM (5 years ago)

If there’s one thing that white-collar workers around the world suddenly have in common because of COVID-19, it’s that we are very suddenly tied to our keyboards, even more so than before. And some of us were very tied to our keyboards!

Unfortunately, as you probably suspected, sitting in front of a computer keyboard for 8 hours a day is not particularly good for your health.

The bigger rules about using a computer at a desk are, I feel, more common knowledge. Drink lots of water. At least once an hour, stand up and walk around for at least 10 minutes, and either go outside or look out windows into the horizon. Sit with good posture.

These things seemed obvious to me. What was less obvious is what to do about my wrists. If a high proportion of your job is about writing, you’ve probably already faced this problem, although you might not have acted on it already.

Anywhere between a few days to a few years into typing 8 hours a day, you will begin to get random wrist pain. At first, it’s just a twinge every once in a while. A moment where you need to rub your wrist and think, “That never did that before.” Next, it’ll be mild throbbing but only at the end of the day. Then you’ll notice it more throughout the day until it becomes unbearable. These are all signs that you’re on the path to permanent wrist issues, and at the end of that road is surgery!

As an academic who spends many hours a day writing in front of a computer (and even more now that all of my teaching is at home too!), I know this road well. That is what led me to research what I can do to ensure my home office (and eventually my real office, in the magical future where we aren’t sheltered-in-place anymore) is designed ergonomically, to ensure my future wrist health for years to come.

If you don’t do these things, the best case is that you’ll have moderate but unnecessary wrist pain. The worst case is carpal tunnel syndrome, which is marked by tingling pain and throbbing in your fingers and wrists and eventually, surgical correction. So take steps now to set yourself on a better path.

Here are six general recommendations, some free and some not. At a minimum, you should do #1, #2 and #3 today!

- Take breaks. As I described earlier, for your general health, you should stand up and walk around for at least 10 minutes at least once an hour (to reduce the chance of blood clots or other heart-related issues), and use that time to go outside or look out windows to the horizon (to reduce eye strain). Another benefit to such breaks is that you give your wrists some time off! Let them just hang loosely and relax while you wander around – don’t hold your phone!

- Stay flexible. One of the easiest and cheapest things you can do to reduce wrist pain is to increase wrist strength through basic physical therapy that you can complete at your desk. Every day, and especially every time you feel that little wrist twinge, spend a few minutes on these wrist exercises.

- Sit at an appropriate height relative to your keyboard. Chair height is a very tricky thing to get right. In addition to sitting with good posture, you should be sitting at a height so that your hips are level or very slightly below your knees, which takes the pressure off the arteries in your legs (important for avoiding blood clots!). But it’s tricky because you should simultaneously maintain a 90-degree (right) angle or larger at the elbow, meaning that your arms should angle down, away from you. See these diagrams from Cornell. In practice, it is next-to-impossible to do this if your keyboard is on a desk. Instead, you likely need a keyboard tray that hangs below the level of your desk, like this one or this one. These trays come in a variety of types, but the main thing to consider: do you want to bolt one to your desk, or do you want one that clamps on?

- Use a vertical mouse or trackball. You might think that mice are all the same, but ergonomic mice are vertical, meaning that you hold them in a more natural angled wrist position instead of flat. Try this: place your hands loosely in your lap and notice what your wrist does – you will notice that the top of your hand angles slightly outwards. Thus, an ergonomic vertical mouse will replicate this angle to avoid wrist strain. I personally use this one by Anker but in the wired version, which has been great (and was cheap), but Logitech has its own design if you prefer a more familiar brand name (although Anker is mainstream among the tech crowd!).

In contrast to vertical mice, trackballs have always been sort of a weird cousin to mice more broadly and that’s still true today. Nevertheless, they are available in a wide variety of styles, some combined with traditional desk-mouse tracking and some not, which tend to be a bit more ergonomic (i.e., they have wrist tilt). There are even handheld versions that you can use without a desk!

- Use a split keyboard with lifters and mechanical switches. Ok, so a lot of new concepts with this one. Split keyboards are keyboards where the left and right halves are split into two physical pieces, which allows you to place them wherever you want – typically slightly angled out to match the natural position of your wrists when sitting with good posture.

Lifters allow you to angle the keyboard to match your natural wrist angle horizontally, the same way a vertical mouse does.

Mechanical switches are individually actuated so that there is “give” when you press each key; the key gradually firms up as you press it further down, which gives a bit of a “bounce” to your typing and softens the impact on your fingers (one hard key impact doesn’t matter – tens of thousands of presses over the course of a day do). Mechanical switches are in contrast to a “membrane key” switch, which is what you typically find in laptops – the top of the key and bottom of the key are very close together, so every tap is a pretty hard hit.

It’s actually surprisingly difficult to find all three of these features in one keyboard at a decent price, so in order of priority, I would suggest split, then mechanical, then lifters.

If you do want to get all three, I have two suggestions. The first is the keyboard I use, the Kinesis Pro with Cherry MX Mechanical Switches, plus its matching lifters. If you instead want to dive off the deep end into the world of absolute keyboard customizability, I would suggest the ErgoDox EZ. The ErgoDox EZ was actually a kickstarted project by a guy named Ergo to create the “perfect” split, lifted, mechanical keyboard. It is intended for “power users” and even requires some time to retrain yourself to use it. It honestly seems like a bit of a cult.

One thing that surprised me when getting into ergonomic computer accessories was just how pricey they were, which is largely driven by the mechanical switches – they must each, one per key, be manufactured and installed separately, which drives the price way up, as does the two-piece form factor. So if you don’t mind sacrificing the switches and keeping it all a single unit while maintaining some of the benefits of the angles, the Microsoft Sculpt has been popular lately, as has the Perixx.

- Use mouse and keyboard wrist support rests. If you have proper posture and use a keyboard tray, this is less important, but I would still suggest a good set of pads. One of the worst things you can do is rest your wrist along the corner of your desk (pinching nerves and blood vessels), and shortly behind that is resting your wrist on the hard surface of your desk rather than floating in midair above your keyboard. In either case, a good wrist rest will provide insurance that you won’t accidentally rest your wrists somewhere where they’ll be pinching something important. And fortunately, rests are pretty cheap insurance!

That’s it! Good luck and happy typing in your new COVID-19 telework job!

The post Avoiding Wrist Pain and Carpal Tunnel Syndrome During COVID-19 Isolation appeared first on NeoAcademic.

Should You Worry About Cheating on Online Tests During COVID-19? 19 Mar 2020 9:04 AM (5 years ago)

One of the depressing yet unsurprising outcomes of the COVID-19 crisis has been the increase in concern about online test security. People are worried students will cheat on their tests.

I definitely understand this concern. Like many instructors who have taught online for a while, I’m hearing about it fresh once again, as one of many issues I worried about and resolved over a decade ago.

I perhaps naively thought of online testing as one of those things that was “more or less resolved” at an institutional level, when clearly in-person instructors have no clue what we’ve been doing online all this time.

I’m also reminded that people really believe the test security measures they use in person are effective. Let me be really clear here: they aren’t. If a student wants to cheat, they’ll find a way, and you probably won’t be able to stop it. Research in this area suggests over 50% of college students cheat on an exam or test at some point, and most cheating occurs in-person because most teaching occurs in-person. Or at least, it did before COVID-19.

When people worry about the shift online increasing cheating, what they are really saying is, “If not for my tight exam security practices in person, cheating would be rampant!” The reality is that your exam security practices already don’t work that well. No one’s do! If someone wants to cheat, they’ll find a way, and it doesn’t particularly matter whether they’re in your classroom or in front of their laptop screen. With more resources, they might be slightly better at cheating, but individual integrity is a far better predictor of whether someone will try to cheat within a given testing context than security behaviors.

Consequences aren’t the sole prevention method for immoral acts. If homicide became legal tomorrow, would most people start murdering everyone around them? A violent minority probably would, but most people wouldn’t, because murder is morally wrong. Society judges you rather harshly for murder!

The same pattern applies here, with the addition of one caveat. Cheating is immoral, and that alone prevents most people from cheating. But when an onslaught of new situational cues overpowers cultural norms, e.g., if you have screaming kids at home under quarantine preventing you from studying as much as you want to, you might be tempted into cheating when you otherwise would not be.

That means your real responsibility as an instructor is not really to implement cheating-prevention techniques but to make cheating unattractive in relation to the morality violation that cheating would create. If you implement generous time limits, reset exams freely when technology problems occur, allow a week span to take the exam, allow open-book exams, and so on, you are actively removing factors that make cheating attractive.

For those worried about grades increasing, let me tell you a story. Like many others, when I moved my in-person class online with an identical test (combo of multiple choice and short answer; same questions), and then later explicitly made that same test open-book, the grade mean and distribution did not change. At all. In fact, the test means were all within 2 points of each other (out of 100) between all three times!

Perhaps the best way to say this: cheaters gonna cheat. You don’t need to worry about them online any more than you worried about them in person.

There are things you can do to decrease the attractiveness of cheating while simultaneously improving your students’ learning. For example, deploy more difficult, highly thoughtful exams that are clearly and unambiguously communicated to students as open-book, open-note. My own favorite approach? Create high-complexity multi-component group projects that require demonstration of capabilities gained during the course. Not only do you get to see your course material come to life through what they produce, but you also have less to grade!

Above all, remember that your overall goal here is to encourage students to take your material seriously and to learn. “Preventing cheating” is a very small part of that charge and not worth nearly as much time as either better pedagogy or helping students manage their stress in this time of crisis.

And one final point: inflicting Respondus on your students halfway through a semester in the middle of a pandemic will help literally no one!!!

The post Should You Worry About Cheating on Online Tests During COVID-19? appeared first on NeoAcademic.

Tips for High-Quality Audio/Video in the Age of COVID-19 18 Mar 2020 2:01 PM (5 years ago)

Most pressure on teachers, instructors, professors, and trainers to develop and release high-quality online materials instantaneously in this age of self-isolation is self-imposed. Most full-time online instructors put six months to a year of effort into their online courses before they run an online course for the first time. You really don’t need to fake the kind of production values and planning that come out of the work done with that sort of lead time. Don’t try, because you’ll just end up driving yourself into a wound-up ball of anxiety.

Having said that, one area that people find very frustrating to deal with but is relatively easy to fix is audio and video quality. If you’re moving to a primarily Zoom, YouTube Live, or Twitch teaching or videoconferencing solution, this is something you should spend at least a few minutes thinking about. If your students and colleagues just see you as a blur, you might as well not show yourself at all, and if they can’t hear you, you might as well communicate solely through email.

If you’re already having audio-video problems, I’m guessing you probably have this sort of startup process for online synchronous teaching:

- Sit down in an office chair with a laptop on a table in front of you wherever seems convenient.

- Open Zoom/OBS/whatever

- Click Record / Start Meeting

Blasphemy! To make matters worse, your camera/microphone solution is probably something like this:

Quelle horreur!

Putting yourself at the mercy of the light and audio situation of wherever you happen to sit down plus using a laptop webcam is a formula for video you’re not happy with.

Laptop cameras in particular are usually designed for the sole purpose of allowing the laptop manufacturer to claim that their laptops have webcams in them, not to actually produce high quality video.

Fortunately, there are two sets of things you can do to improve your audio and video, and both are quite easy: optimize your recording space and invest in hardware upgrades.

Optimize Your Recording Space

Without training in videography, it can be a bit difficult to understand why the space you record in is so important. But think of it this way: why would film studios develop entire custom-designed buildings if they could just always record outside? Why do soundstages exist?

The answer is that most existing indoor spaces are minefields of weird problems for video and audio recording. You can without realizing it end up with grainy video, stuttering audio, background noise, or a host of other problems.

Fortunately, you can also just follow the list of recommendations below to avoid most of these problems. Following these recommendations will increase even the quality of laptop webcams pretty dramatically, perhaps even to the point that you don’t need to buy a new webcam to get the quality level you want. High quality cameras, in a sense, give you greater flexibility and leeway for recording in non-ideal recording conditions. But if you can create perfect recording conditions, then your camera may not matter quite so much (to a point!).

Here are two general procedures to follow, to optimize for the two issues you’re trying to address.

Maximizing Video Quality

For all of this, I’m assuming you want to record on a laptop. If you only have a desktop, you have fewer options. Before you check the steps below, open your laptop so that you can see your own camera (e.g., the Camera app on Windows 10) and then move around the space you have for recording until you can satisfy as many of these conditions as possible.

- You will want to record (and test right now!) during the middle of the day, in a room with a window, whether it’s cloudy or clear out. If you find yourself needing to record in the evening, night, or early morning, you may want to invest in some indirect studio light kits like these.

- Find a room or space with bright indirect light, where you can sit with that light mostly in front of you, and preferably slightly to the side. For example, you might sit directly in front of a window but then turn your laptop and body about 10 degrees to the left or right. Indirect light means that there’s not a light source shining directly on any visible surfaces – think about why we use lamp shades instead of bare bulbs: the shade diffuses direct light into indirect light. I personally use a room where I can turn toward a south-facing window with a transparent window shade (which I keep closed on sunny days and open on cloudy days).

- Ensure that there is no direct sunlight shining in frame and no reflective surfaces. Direct sunlight and reflections of the sun will blind your camera and kill picture quality.

- Ensure there are no light sources behind you. This means no backlighting. In rooms with lots of windows, this can be a challenge.

- Once you’ve found this space, look at the video feed and check for uneven lighting. Your webcam software will use a lot of tricks to try to compensate for too little or too much light, but it is very bad at doing this if your lighting is not consistent all the way across the frame. So if you see any bright or dark spots, try to get rid of them, either by moving or rearranging the room.

- Once you’ve done this, check how much the color of your face is being affected by the color of your screen. Most of the time, you’ll have lots of white background visible, which will cause your face to appear somewhat blue in relation to the things around and behind you. The easiest solution to this problem is to put the laptop further away from you. If that’s not an option, install a blue-light management program like my personal favorite, Flux. Inside Flux, lower the temperature of white light on your computer screen until it is similar to the color of the world around you. For example, Flux will start at “full blue light”, which is around 6500K, so if the world around you is yellow-ish, perhaps try dropping it down to 4400K.

Maximizing Audio Quality

This is a little trickier, since you’ll need to record yourself and then listen to the recording to see how it sounds. Use headphones. For the recording step, I’d recommend the Voice Recorder app, which comes pre-installed on Windows 10.

- Be absolutely silent, close your eyes, and listen for sources of constant or intermittent background noise in the space you’re in. A non-exhaustive list includes:

  - Air conditioner/heater hum

  - Ceiling fans

  - Flushing toilets

  - Water rushing through pipes

  - Road noise from street-facing windows

  - Open windows in general (close all windows!)

  - Background conversations/chatter

  - Your own computer’s internal fan

- To the extent that these sources of noise can be shut off or avoided by moving to another room, do so. For example, when I recorded videos for my online classes in my last place of residence, I would turn off the air conditioning at the thermostat during recording because the best place in the house for light was also right next to the furnace/blower. After an hour (and a 5-10 degrees warmer house), I’d turn it back on. Sometimes I would even set the thermostat 5 degrees below normal and let the whole house cool down before turning it off, to minimize discomfort during recording. If your laptop’s fan is too loud, try moving your microphone away from your laptop (which requires an external microphone).

- Once you’ve found a promising space, try recording yourself talking, and then listen to yourself speak on headphones with the volume way up. This is how many students will listen to you, so you should simulate that environment. It will also help you hear any remaining background noise more clearly. One other thing to listen for at this stage is echo. If you have echo, that means you probably have a lot of hard surfaces all around you. You can reduce this by either moving to a larger room or by putting a lot of soft surfaces in the room to absorb errant sound waves. Even lining the floor with pillows will help more than you might guess!

As a warning to perfectionists, you will not be able to eliminate 100% of background noise. This is where a high quality microphone, like the ones I describe below, will help, as they will do most of the rest of the job for you. It still won’t be perfect, but it can be very close. And if you’re recording your videos rather than live streaming, you may even have options depending upon your editing software to use adaptive noise reduction to eliminate even the faintest background hum.
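If you would rather put a number on the background noise than trust your ears alone, here is a rough sketch in Python. It is not part of my own workflow, just one way you might compare candidate rooms. It assumes you have a few seconds of "silence" (you sitting quietly, not talking) saved as a 16-bit PCM WAV file; Voice Recorder does not save WAV by default, so you would need to record or export with some other tool. The function names `rms_dbfs` and `read_wav_samples` are made up for this example.

```python
import math
import struct
import wave


def rms_dbfs(samples, full_scale=32768):
    """Return the RMS level of 16-bit samples in dB relative to full scale.

    0 dBFS is the loudest possible signal; quieter rooms give more
    negative numbers.
    """
    if not samples:
        raise ValueError("no samples to measure")
    mean_square = sum(s * s for s in samples) / len(samples)
    if mean_square == 0:
        return float("-inf")  # pure digital silence
    return 20 * math.log10(math.sqrt(mean_square) / full_scale)


def read_wav_samples(path):
    """Read every sample from a 16-bit PCM WAV file.

    Assumption: the file is uncompressed 16-bit PCM. Stereo channels are
    left interleaved, which is fine for a rough overall level.
    """
    with wave.open(path, "rb") as wav:
        if wav.getsampwidth() != 2:
            raise ValueError("this sketch only handles 16-bit PCM")
        frames = wav.readframes(wav.getnframes())
    count = len(frames) // 2
    return list(struct.unpack("<%dh" % count, frames))
```

To use it, record the same few seconds of silence in each candidate room and compare, for example, `rms_dbfs(read_wav_samples("room_a.wav"))` against the same call for `room_b.wav` (both file names are placeholders). The room with the more negative number has the quieter noise floor. Treat the result as a relative comparison between rooms recorded with the same microphone and settings, not as an absolute quality score.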

All of these optimizations will allow you to take advantage of your hardware to its full potential. But what if your hardware isn’t that good to begin with? Well, that’s what the next section is for.

Invest in Hardware Upgrades

First of all, let me say that if you are on a limited or zero teaching budget, especially if you are an underpaid adjunct, you should feel zero pressure to get new hardware. This will make the most sense to people who plan to teach online more in the future and want to build a portfolio of online teaching content, or those who are frequently in online video conferences where people can’t see or hear them clearly, or for those who have some extra teaching dollars available (hahahaha!).

As a preview of prices in the next sections, you can get a nice quality microphone for $45 that will help more than any other single change you can make to your recording situation. If you want to trick out your recording space and quality, you can easily spend several hundred, and if you go really high quality, you can get to professional-grade and spend thousands. So there is a huge range, depending upon interest and resources.

Most fundamentally, two pieces of hardware will help more than anything else, and one will help more than the other.

Most Important: A Nice USB Cardioid Microphone

A high quality microphone is, above all else, the most important piece of hardware you can upgrade. Laptop microphones are notoriously horrible in terms of quality – if you’ve ever heard someone who always sounded muffled and dropped in and out randomly, it’s probably because they were using a laptop mic.