How-To: Convert a WordPress site from Multisite to Standalone install 25 Jan 2016 10:29 PM (9 years ago)

Since WordPress 3.0, it is possible to create a network of sites by using the multisite feature. It allows you to manage multiple WordPress websites from a single installation. Plugins and themes get installed and maintained in one place, and the individual sites can activate them.

While multisite has its benefits, in my case, I wanted to return to a good old standalone install. A long time ago I had planned to move multiple websites from Drupal onto WordPress, but never got to it. Also, I much prefer to handle the upgrade of one site on its own rather than upgrading all of them at the same time. While WordPress does a good job at making maintenance easy, I have been bitten a few times in the past by upgrades going bad.

Finally, one should also read “WordPress: Create A Network” before diving head first into setting it up.

Moving off a multisite install is not that straightforward: some of the resources will end up in different places, permalinks need to be fixed and some files need to be moved around.

Disclaimer

Before doing anything, make a backup of your site and try to run this tutorial in a VM or dev environment as you may need to adjust a few steps to fit your setup.

Also note that you should not run this on a live website, as the migration will cause downtime.

This worked for me, but the migration is rather manual and your mileage may vary. Please follow these steps with caution!

Getting started

Finding your site ID

Now that you have a backup, you will need to find the ID of the site you are migrating. You can get this by visiting http://example.com/wp-admin/network and finding the site you are migrating off.

In this example, I was moving site ID 2.

Database

Exporting your DB

The database tables you will want to export may vary depending on the plugins you have installed and used. Here, assuming your site had ID 2 and your db table_prefix was set to wp_, you will want to dump ALL tables starting with wp_2_ along with wp_usermeta and wp_users, as they contain the users from the multisite.

Here is a 1-liner that may help to get all the required data out assuming:

- DB name: mywordpressdb

- DB table prefix: wp_

- Site ID: 2

mysqldump -u root -p mywordpressdb $(mysql -u root -p mywordpressdb -N -s -e 'show tables;' | grep -E '^wp_(2_|users$|usermeta$)') > mywordpressdb.sql

Fixing the DB

Now that we have the relevant tables, it is time to fix the content of the DB. sed is your friend here:

sed -i 's#wp-content/blogs.dir/2/files#wp-content/uploads#g' mywordpressdb.sql # Fix uploaded file locations

sed -i 's#wp_2_#wp_#g' mywordpressdb.sql # fix table names

sed -i 's#/files/#/wp-content/uploads/#g' mywordpressdb.sql # fix file upload permalinks

At this stage, you should have a database backup that should allow you to restore your wordpress site in standalone mode.
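Before running the substitutions on the real dump, it can help to preview their effect on a few sample strings. The sketch below approximates the three sed expressions in Python, applied in the same order. It assumes site ID 2 and the wp_ prefix used above; the function name rewrite_dump_line and the sample strings are made up for illustration.

```python
# Illustrative only: approximates the three sed substitutions, in the same order,
# using plain-text replacement. Assumes site ID 2 and table prefix "wp_".
# rewrite_dump_line is a hypothetical helper name, not part of any tool.
REPLACEMENTS = [
    ("wp-content/blogs.dir/2/files", "wp-content/uploads"),  # uploaded file locations
    ("wp_2_", "wp_"),                                        # table names
    ("/files/", "/wp-content/uploads/"),                     # file upload permalinks
]


def rewrite_dump_line(line):
    """Apply each replacement in turn and return the rewritten line."""
    for old, new in REPLACEMENTS:
        line = line.replace(old, new)
    return line


if __name__ == "__main__":
    samples = [
        "CREATE TABLE `wp_2_posts` (",
        "http://example.com/files/2016/01/photo.png",
        "/var/www/wordpress/wp-content/blogs.dir/2/files/2016/01/photo.png",
    ]
    for sample in samples:
        print(rewrite_dump_line(sample))
```

Running it on strings taken from your own dump is a cheap way to spot values that the third, fairly broad, /files/ substitution would rewrite by accident.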

WordPress files

Assuming your wordpress install is in /var/www/wordpress, running the following command should copy the files that were uploaded while using a multisite set up to a standalone setup:

rsync -avz /var/www/wordpress/wp-content/blogs.dir/2/files/ /var/www/wordpress/wp-content/uploads/

rm -rf /var/www/wordpress/wp-content/blogs.dir/2/files

Importing standalone setup DB

The backup we made earlier should contain all the data we need, but to be on the safe side, we will let the wordpress installer bootstrap a new DB and then re-import the data.

Bootstrapping the DB

At this stage, I assume you have a brand new DB called mywordpressdb. To force the WordPress installer to run, we will move wp-config.php to another location and copy it back later.

Accessing http://www.example.com should now trigger the WordPress installer. Go through the install steps to set up the database.

Restoring DB

To restore the DB from our backup, we will use the mysql CLI:

mysql -u root -p mywordpressdb < mywordpressdb.sql

Fixing the config

We now need to fix the wp-config.php file by removing references to multisite config. Below is an example of the constants that need to be removed from wp-config:

--- wordpress.old/wp-config.php 2016-01-25 17:07:24.437572380 -0500

+++ wordpress/wp-config.php 2016-01-25 23:44:56.432183250 -0500

@@ -119,15 +121,6 @@

* in their development environments.

*/

define('WP_DEBUG', false);

-define('WP_ALLOW_MULTISITE', true);

-

-define( 'MULTISITE', true );

-define( 'SUBDOMAIN_INSTALL', true );

-$base = '/';

-define( 'DOMAIN_CURRENT_SITE', 'debuntu.org' );

-define( 'PATH_CURRENT_SITE', '/' );

-define( 'SITE_ID_CURRENT_SITE', 1 );

-define( 'BLOG_ID_CURRENT_SITE', 1 );

/* That's all, stop editing! Happy blogging. */

Finally, we need to copy wp-config.php back to /var/www/wordpress/

How-To: Prevent SPAM with Apache’s mod security 6 Nov 2014 5:31 AM (11 years ago)

WordPress is a great piece of software to run a blog: it is flexible, has tons of plugins developed for it, and updates are really easy to do. To fight spam comments, there is already the Akismet plugin, which does a really good job.

While Akismet catches the spam comments and puts them in a separate location, making it easy to delete them, as the amount of spam grows, WordPress can take a long time to empty the spam queue, and the best option becomes a manual SQL query to flush them.

In this article, we will see how we can use RBL to prevent spammers from posting to WordPress’s comment page and, at the same time, lift a bit of load from the server.

While the rules are written for WordPress, with a few modifications it will be easy to get this setup working for any kind of blog/website.

This setup was tested on Debian Wheezy. It is assumed that you already have a working Apache server and a working instance of WordPress running.

In a nutshell, what we are going to do here is use ModSecurity to inspect the web requests and check against some RBLs whether the IP that attempts to post a comment is a known spammer. If that is the case, we will deny access to the page, avoiding any access to the Akismet service and the database.

RBL works by doing a DNS lookup for a special hostname. Depending on the returned address, we will know if the IP is blacklisted or not. It is lightweight, and subsequent calls will be faster as the DNS entry will be cached.
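To make the "special hostname" more concrete, here is a minimal Python sketch of how a DNSBL lookup name is usually built for an IPv4 address: the octets are reversed and the list's zone is appended. The helper names rbl_query_name and is_listed are made up for this example, and is_listed is a simplification: it treats any successful resolution as a listing and ignores the meaning of the returned address.

```python
import ipaddress
import socket


def rbl_query_name(ip, zone):
    """Build the DNSBL lookup name: reversed IPv4 octets followed by the zone."""
    octets = str(ipaddress.IPv4Address(ip)).split(".")
    return ".".join(reversed(octets)) + "." + zone


def is_listed(ip, zone):
    """Simplified check: True if the lookup name resolves, False if the lookup fails."""
    try:
        socket.gethostbyname(rbl_query_name(ip, zone))
    except socket.gaierror:
        return False
    return True


if __name__ == "__main__":
    # 192.0.2.99 is a documentation address, used here purely as an example.
    print(rbl_query_name("192.0.2.99", "zen.spamhaus.org"))
```

ModSecurity's @rbl operator takes care of this for us; the sketch is only there to show what kind of DNS query ends up being made.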

Installation

To be able to use the RBL functionality in ModSecurity, we need at least version 2.7. Debian Wheezy ships version 2.6.6 but luckily, the debian-backports repository has version 2.8.0. So the first step is to enable Debian backports.

echo "deb http://http.debian.net/debian wheezy-backports main" > /etc/apt/sources.list.d/wheezy-backports.list

apt-get update

And then install libapache2-mod-security2:

apt-get install libapache2-mod-security2

a2enmod security2

Configuration

Now, edit your virtual host and add the following within the VirtualHost namespace:

<IfModule security2_module>

SecRuleEngine On

SecRequestBodyAccess Off

SecRule REQUEST_METHOD "POST" "id:'400010',chain,drop,log,msg:'Spam host detected by zen.spamhaus.org'"

SecRule REQUEST_URI "\/wp-comments-post\.php" chain

SecRule REMOTE_ADDR "@rbl zen.spamhaus.org"

SecRule REQUEST_METHOD "POST" "id:'400011',chain,drop,log,msg:'Spam host detected by netblockbl.spamgrouper.com'"

SecRule REQUEST_URI "\/wp-comments-post\.php" chain

SecRule REMOTE_ADDR "@rbl netblockbl.spamgrouper.com"

</IfModule>

And finally, check that the config is correct and if it is, reload apache:

apache2ctl -t

/etc/init.d/apache2 reload

What this does is to enable ModSecurity engine (SecRuleEngine On), to only look at the HTTP headers (SecRequestBodyAccess Off) and finally set the rules up. We have 2 similar rules checking 2 different DNSBL. If the first one does not match the IP, the second one may. I have found zen.spamhaus.org to catch most of them, and netblockbl.spamgrouper.com to catch some of the ones that went through.

We are chaining 3 rules, and if the 3 match, we will take the actions from the first rule. As a sidenote, when chaining rules, the disruptive actions must appear in the first rule (drop here).

So, our first rule says that we want to match the HTTP method POST. We chain this rule and, if all chained rules match, we drop the request and log it with a message along the lines of: Spam host detected by dnsbl_provider. The next rule checks if the URI is /wp-comments-post.php, which is the page used to post comments, and is also chained. Finally, the third rule checks that the remote IP is known to the DNSBL.

If all 3 conditions are met, the request is dropped and does not even reach WordPress.

Another good DNSBL that caught IPs that made it through the first 2 is Project Honeypot. You need to sign up and get your BL API key. Once you have it, you can add it to the list and fill in the key with the SecHttpBlKey directive as follows:

SecHttpBlKey my_key_here

SecRule REQUEST_METHOD "POST" "id:'400012',chain,drop,log,msg:'Spam host detected by dnsbl.httpbl.org'"

SecRule REQUEST_URI "\/wp-comments-post\.php" chain

SecRule REMOTE_ADDR "@rbl dnsbl.httpbl.org"

Avoiding too many lookups

We can improve the logic above by blacklisting, from within ModSecurity and for some time, any IP that matched against a DNSBL. This way, if we see an IP that we already know is a spammer, we avoid querying the DNS for no reason.

What we will do here is blacklist an IP for 7 days once it has matched against a DNSBL. We will only do that for POSTs to the comment page (but we could be more aggressive and blacklist it for any page).

Change the rules to look like:

<IfModule security2_module>

SecRuleEngine On

SecRequestBodyAccess Off

SecAction "id:400000,phase:1,initcol:IP=%{REMOTE_ADDR},pass,nolog"

SecRule IP:spam "@gt 0" "id:400001,phase:1,chain,drop,msg:'Spam host %{REMOTE_ADDR} already blacklisted'"

SecRule REQUEST_METHOD "POST" chain

SecRule REQUEST_URI "\/wp-comments-post\.php"

SecRule REQUEST_METHOD "POST" "id:'400010',chain,drop,log,msg:'Spam host detected by zen.spamhaus.org'"

SecRule REQUEST_URI "\/wp-comments-post\.php" chain

SecRule REMOTE_ADDR "@rbl zen.spamhaus.org" "setvar:IP.spam=1,expirevar:IP.spam=604800"

SecRule REQUEST_METHOD "POST" "id:'400011',chain,drop,log,msg:'Spam host detected by netblockbl.spamgrouper.com'"

SecRule REQUEST_URI "\/wp-comments-post\.php" chain

SecRule REMOTE_ADDR "@rbl netblockbl.spamgrouper.com" "setvar:IP.spam=1,expirevar:IP.spam=604800"

SecHttpBlKey my_key_here

SecRule REQUEST_METHOD "POST" "id:'400012',chain,drop,log,msg:'Spam host detected by dnsbl.httpbl.org'"

SecRule REQUEST_URI "\/wp-comments-post\.php" chain

SecRule REMOTE_ADDR "@rbl dnsbl.httpbl.org" "setvar:IP.spam=1,expirevar:IP.spam=604800"

</IfModule>

What is new? First, we initialise a collection called IP. Then, if the value of IP.spam is greater than 0, we chain the rule, and drop the request and log the message ‘Spam host %{REMOTE_ADDR} already blacklisted’ if all the other chained rules match. In the chained rules, we check that the request is a POST to the comment page.

Now, when we detect a spammer with a DNSBL, we set the variable IP.spam to 1 and make that variable expire after 7 days (604800 seconds).
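The flag-then-expire idea can be sketched as a toy model in Python. This is not how ModSecurity stores its collections; it only illustrates the logic of remembering an IP for 604800 seconds and forgetting it afterwards. The class name SpamCache and its methods are invented for this example.

```python
import time

SPAM_TTL_SECONDS = 7 * 24 * 60 * 60  # 604800, the value given to expirevar


class SpamCache:
    """Toy stand-in for the IP collection: remembers flagged IPs until they expire."""

    def __init__(self, ttl=SPAM_TTL_SECONDS):
        self.ttl = ttl
        self._expires_at = {}

    def flag(self, ip, now=None):
        """Mark an IP as a spammer, like setvar plus expirevar."""
        now = time.time() if now is None else now
        self._expires_at[ip] = now + self.ttl

    def is_flagged(self, ip, now=None):
        """Return True while the flag is still valid; drop it once expired."""
        now = time.time() if now is None else now
        expiry = self._expires_at.get(ip)
        if expiry is None:
            return False
        if now >= expiry:
            del self._expires_at[ip]
            return False
        return True


if __name__ == "__main__":
    cache = SpamCache()
    cache.flag("192.0.2.99")
    print(cache.is_flagged("192.0.2.99"))   # flagged just now
    print(cache.is_flagged("192.0.2.100"))  # never flagged
```

While an IP is flagged, no DNS query is needed to reject it, which is the point of rule 400001.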

Conclusion

Using this technique, I have brought the number of comments marked as spam by Akismet down by a factor of 50! I can now let spam stack up in the spam comment list and WordPress can still delete it quickly when clicking on Empty Spam.

Not only does it make the experience more enjoyable, but by tackling the spam earlier, you save some processing resources, avoid useless writes to the DB and queries to Akismet, along with some bandwidth, as the 403 page is much more lightweight than a rendered blog page.

By keeping track of known spamming IP, we can also bypass DNS queries.

How-To: Fight SPAM with Postfix RBL 25 Sep 2013 11:02 PM (12 years ago)

Spam, spam everywhere! If you are hosting your own mail server, fighting spam can become tricky. Antispam solutions do catch a fair amount of it, but many spam emails still make their way through.

RBL (Real-time Blackhole List) is a database of known spammy IPs which is accessible over DNS. Depending on the response received from the DNS server, the IP is classified as spammy or not.

This tutorial will show you how to set up RBL with postfix.

We are not going to cover how to install and set up postfix here. If you do not have a working postfix setup yet, you could check How-To: Virtual Emails Accounts With Postfix And Dovecot.

Now that you have a working postfix setup, let’s talk about what we want to achieve. Basically, the idea behind RBL is that we want to reject emails upon reception if they are coming from an IP which is known to be spammy.

In Postfix, this happens in the smtpd_client_restrictions config entry which resides in /etc/postfix/main.cf.

There, we will add a few reject_rbl_client entries that will take care of rejecting a client connection if it is blacklisted by one of the services.

smtpd_client_restrictions = permit_mynetworks,
    permit_sasl_authenticated,
    reject_unauth_destination,
    reject_rbl_client zen.spamhaus.org,
    reject_rbl_client bl.spamcop.net,
    reject_rbl_client cbl.abuseat.org,
    permit

And finally, restart your mail server to take the new settings into account:

/etc/init.d/postfix restart

Let’s go over the settings. In this case, I have chosen to use zen.spamhaus.org first, bl.spamcop.net second and finally cbl.abuseat.org.

Essentially, what is going to happen given the set of rules above is that email sent from my network will be accepted with no checks. The same goes for authenticated users (e.g. email account/password). If the email is sent neither from my network nor by an authenticated account, we reject all email whose destination is not known to me, i.e. no relaying (reject_unauth_destination). At that stage, the emails that have not matched any of the previous rules are basically emails sent to one of the hosted mail domains. This is where we apply the RBL filter and reject emails coming from blacklisted clients.

If it passes all 3 RBL filters, the email will be allowed through and go on to the next steps (antispam, antivirus…).

Using RBL is really efficient and pretty lightweight. All it takes is some DNS queries, and if you receive a lot of spam email from the same client, these DNS entries will be cached in your (local) DNS. To give some figures on how many emails get caught through RBL: on a server where 90% of the email is rejected, 98% of those rejections come from RBL; the rest is relay being denied!

You can check this list of DNS Blacklist services.

Enjoy your (almost) spam free email experience!

How-To: using Python Virtual Environments 2 Sep 2013 10:30 PM (12 years ago)

A nice thing about Python is that there are tons of modules available out there. Not all those modules are readily available for your distro, and even if they were, chances are that a newer release with new features is already out there.

You might not always want to install those modules system wide, either because there might not be any need for it, or because they could clash with the same module installed via package management.

To answer this problem, Python has virtualenv, a tool that lets you create multiple virtual Python instances within which you can install whichever modules you might need. All this without requiring root privileges.

First, let’s install the main package for the job: virtualenv:

$ sudo apt-get install python-virtualenv

Now we can get ready and start installing our first virtualenv environment called venv

$ virtualenv ~/venv

New python executable in venv/bin/python

Installing distribute.............................................................................................................................................................................................done.

Installing pip...............done.

In order to get into that virtual env, we have to run the following command:

$ source ~/venv/bin/activate

(venv) $

At this stage, we are confined in this newly created python environment and we can start to install some packages in it. Let’s install django-1.4:

(venv) $ pip install django==1.4

...

(venv) $ python -c "import django; print django.get_version()"

1.4

Now, in order to get out of this virtual environment, run:

(venv) $ deactivate

$

If you want to force the version of python to be used, you have to explicitly say it when creating the new environment. Suppose we have python3.2 binary available at /usr/bin/python3.2, you can create a virtual environment that will use it by using the following command line:

$ virtualenv -p /usr/bin/python3.2 ~/venvpy3

$ source ~/venvpy3/bin/activate

(venvpy3) $ python -V

Python 3.2.3

(venvpy3) $ deactivate

$

That’s about all it takes to have an isolated python environment where you can easily pip install anything you like without messing with the system.
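If a script needs to know whether it is being run from inside such an environment, the interpreter itself can tell. The sketch below relies on two attributes of the sys module: sys.real_prefix, which older virtualenv releases set, and sys.base_prefix, which exists on Python 3.3 and later. The function name in_virtualenv is just an illustrative choice.

```python
import sys


def in_virtualenv():
    """Return True when the running interpreter belongs to a virtual environment."""
    # Older virtualenv releases set sys.real_prefix; Python 3.3+ exposes
    # sys.base_prefix, which differs from sys.prefix inside an environment.
    if hasattr(sys, "real_prefix"):
        return True
    return getattr(sys, "base_prefix", sys.prefix) != sys.prefix


if __name__ == "__main__":
    print("prefix: %s" % sys.prefix)
    print("in virtualenv: %s" % in_virtualenv())
```

Run once with the environment activated and once after deactivate, it should report different prefixes.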

A separate utility, virtualenvwrapper, is available to ease the management of virtual envs. It is worth checking out!

How-To: Automatically logout idle bash session 14 Jul 2013 11:08 PM (12 years ago)

It can be useful to have a bash session close automatically after some time. One obvious reason you might want this is to make sure that no console is left open with root access unintentionally.

Bash comes ready for this and can be configured to automatically terminate after waiting for activity for a given time.

By default, bash will wait indefinitely for input. But, there is a way to tell bash, or more exactly the read builtin command to timeout after some time of inactivity. This has the effect, for an interactive shell, to terminate after waiting for that time.

TMOUT is the environment variable that defines that timeout.

In order to have this applied to ALL users on your system, you can create a file called: /etc/profile.d/bash_autologout.sh and add the following to it:

export TMOUT=600

The next time users log in, their shell will set and export TMOUT and will terminate after 10 minutes of inactivity.

How-To: WiFi roaming with wpa-supplicant 17 Jun 2013 10:14 PM (12 years ago)

wpa_supplicant can be used as a roaming daemon so you can get your system to automatically connect to different networks as you go from one location to another.

This comes in pretty handy on headless machines where you rely on the network connection being up in order to access the machine.

First, you need to make sure that you have wpasupplicant installed:

apt-get install wpasupplicant

Once wpasupplicant is installed, we can go ahead and configure different networks. Go and open /etc/wpa_supplicant/wpa_supplicant.conf:

ctrl_interface=DIR=/var/run/wpa_supplicant GROUP=netdev

update_config=1

# WPA-Personal (PSK)

network={

ssid="home"

scan_ssid=1

key_mgmt=WPA-PSK

psk="home_psk"

id_str="home"

}

# work network; use EAP-TLS with WPA; allow only CCMP and TKIP ciphers

network={

ssid="work"

scan_ssid=1

key_mgmt=WPA-EAP

pairwise=CCMP TKIP

group=CCMP TKIP

eap=TLS

identity="user@example.com"

ca_cert="/etc/cert/ca.pem"

client_cert="/etc/cert/user.pem"

private_key="/etc/cert/user.prv"

private_key_passwd="password"

id_str="work"

}

# non encrypted network

network={

ssid="unsecure"

scan_ssid=1

key_mgmt=NONE

id_str="unsecure"

}

Now that we have our different SSIDs set up, we have to configure /etc/network/interfaces to use wpa-supplicant in roaming mode.

To do that, you need to make sure wlan0 (or whichever interface name your WiFi interface maps to) is set up as follows:

allow-hotplug wlan0

iface wlan0 inet manual

wpa-roam /etc/wpa_supplicant/wpa_supplicant.conf

iface default inet dhcp

With this setting, wpa-supplicant will establish a link with the access-point. Then, the OS will set up the network with DHCP.

As you might have seen, we have defined id_str for each network in /etc/wpa_supplicant/wpa_supplicant.conf . We can use those IDs to set up custom network settings depending on which access point we are connected to.

In the settings below, we will configure a static ip for home and work and we will default to DHCP for the rest.

allow-hotplug wlan0

iface wlan0 inet manual

wpa-roam /etc/wpa_supplicant/wpa_supplicant.conf

# Leave this in to default to dhcp

iface default inet dhcp

# At home, we want to have a static IP 192.168.1.2/24 with default gw 192.168.1.1

iface home inet static

address 192.168.1.2

netmask 255.255.255.0

gateway 192.168.1.1

# At work, we want static IP 10.0.0.20/24 with default gw 10.0.0.1

iface work inet static

address 10.0.0.20

netmask 255.255.255.0

gateway 10.0.0.1
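When writing static stanzas like these by hand, it is easy to get the mask or the gateway wrong. As a quick sanity check, the following Python sketch uses the standard ipaddress module to derive the dotted netmask from the /24 notation used in the comments and to confirm that the gateway sits in the same network. The helper name check_static_config is hypothetical.

```python
import ipaddress


def check_static_config(cidr, gateway):
    """Return (netmask, gateway_is_on_link) for an address given as 'a.b.c.d/len'."""
    interface = ipaddress.ip_interface(cidr)
    on_link = ipaddress.ip_address(gateway) in interface.network
    return str(interface.netmask), on_link


if __name__ == "__main__":
    # Values taken from the home and work stanzas.
    for cidr, gateway in (("192.168.1.2/24", "192.168.1.1"),
                          ("10.0.0.20/24", "10.0.0.1")):
        netmask, on_link = check_static_config(cidr, gateway)
        print("%s netmask=%s gateway_on_link=%s" % (cidr, netmask, on_link))
```

Both stanzas should report a netmask of 255.255.255.0 and a gateway that is on the local network.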

That should be it, you can run the following as root:

ifdown wlan0; ifup wlan0

And the interface should come up if you properly set up all your settings.

More info on setting wpa_supplicant.conf can be checked through

man wpa_supplicant.conf

How-To: Make a file Immutable/Write protected 9 Jun 2013 10:35 PM (12 years ago)

There might be times when you want to make sure that a file is protected from accidental or automated change or deletion. While removing write permissions with standard Unix file permissions can already save you from some situations, there is more that can be done on Linux.

The e2fsprogs software suite comes with a bunch of file system utilities for the ext* filesystems. Amongst them is chattr, which helps us change file attributes on a Linux file system.

While there are numerous attributes that can be changed, for the purpose of this post, we will look at the attribute that makes a file/directory immutable, even by root and regardless of the Unix filesystem permissions.

The attribute that we will modify is i as in immutable.

Making a file/directory immutable

To make a file or directory immutable, we will be using the following command (considering that the file we modify is called foo):

# chattr +i foo

Let’s play with 1 file and see how things go:

# ls -l foo

-rwxrwxrwx 1 user user 4 Jun 9 22:30 foo

# echo "foo" >> foo

# chattr +i foo

# echo "foo" >> foo

-su: foo: Permission denied

# rm foo

rm: cannot remove `foo': Operation not permitted

Removing immutable attribute from a file/directory

To remove that attribute, we need to use the -i version of the command:

# chattr -i foo

Now that we have removed the attribute, we can modify/remove the file:

# echo "foo" >> foo

# rm foo

Checking file attributes

The lsattr command can be used to verify what attributes are set on a file/directory:

$ lsattr foo

----i--------e-- foo

There are more attributes available. To find out more about them, refer to:

$ man chattr

Do mind that some attributes are not enabled on mainline Linux kernels.

Debian 7.0 Wheezy released 4 May 2013 8:30 PM (12 years ago)

Debian 7.0, code name Wheezy, is finally released.

This new release comes with some interesting new features such as multiarch support and tools to deploy private clouds based on OpenStack and Xen Cloud Platform (XCP).

For the first time, Debian supports booting using UEFI.

Debian 7.0 Wheezy will be running on Linux 3.2. The LAMP stack will be running off Apache 2.2.22, PHP 5.4.4 and MySQL 5.5.30.

On the graphical interface side, Gnome 3.4, XFCE 4.8 and KDE 4.8.4 will run your desktop. Iceweasel 10 and Icedove 10 will be your door to the internet.

On the virtualization side, Xen Hypervisor 4.1.4 will be running your virtual machines.

And much much more…

It is now time to get your Debian stable upgraded to Debian Wheezy and start playing with the new Debian Testing: Debian Jessie!

For more information regarding this release, the official release notes can be found in the “Debian 7.0 Wheezy released” announcement.

How-To: tail multiple files with multitail 28 Apr 2013 9:13 PM (12 years ago)

Many times you will end up tailing multiple files simultaneously. There is a sweet linux utility called multitail that will let you tail multiple files at the same time within the same shell.

And not only will you be able to tail multiple files! You will also be able to run multiple commands and tail their outputs!

multitail allows you to tail multiple files within the same window. Outputs can be either merged together or shown in split panes either horizontally or vertically.

Installation

On Debian, installation is pretty straightforward and only needs a bit of apt-get-ing:

# apt-get install multitail

Usage

There are tons of ways to use that command. The man page is filled with different switches that can be used to better customize the output.

I am going to show you a few ways to use it.

First, let’s aggregate the output of 2 log files within the same window. This is done by using a command like:

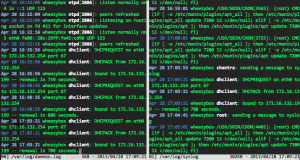

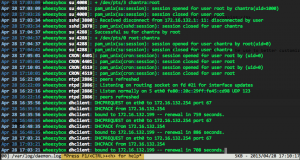

# multitail -i /var/log/daemon.log -I /var/log/auth.log

This tells multitail to tail files /var/log/daemon.log and /var/log/auth.log and to merge the output of /var/log/auth.log into the first window.

You can also have the same files’ output in 2 different panes:

# multitail -i /var/log/daemon.log -i /var/log/syslog

This will have the 2 files displayed in 2 different panes split across the horizontal axis.

# multitail -s 2 -i /var/log/daemon.log -i /var/log/syslog

will have the same files split across the vertical axis.

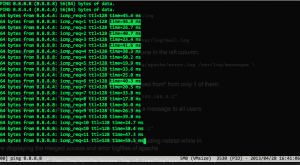

As said earlier, multitail can also tail the output of commands. In a nutshell, to do this, you will need to use -l in place of -i and -L instead of -I.

$ multitail -l "ping 8.8.4.4" -ec 'time=4[0-9]\.?[0-9]* ms' -L "ping 8.8.8.8"

This will ping 8.8.4.4 and 8.8.8.8 simultaneously and merge the outputs within the same window. On top of that, it will highlight the chunks of output that match time=4[0-9]\.?[0-9]* ms, e.g. any ping whose RTT is between 40 and 50ms.
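To get a feel for what that expression picks up before using it in multitail, it can be tried against a few sample ping lines. The sketch below uses Python's re module, on the assumption that it interprets this simple pattern the same way as the regex engine used by multitail. The sample lines and the helper name highlight are made up.

```python
import re

# Same expression as the one passed to -ec.
RTT_PATTERN = re.compile(r"time=4[0-9]\.?[0-9]* ms")


def highlight(line):
    """Return the chunk of the line that would be highlighted, or None."""
    match = RTT_PATTERN.search(line)
    return match.group(0) if match else None


if __name__ == "__main__":
    samples = [
        "64 bytes from 8.8.8.8: icmp_seq=1 ttl=57 time=42.3 ms",
        "64 bytes from 8.8.8.8: icmp_seq=2 ttl=57 time=12.1 ms",
        "64 bytes from 8.8.4.4: icmp_seq=3 ttl=57 time=400 ms",
    ]
    for sample in samples:
        print(highlight(sample))
```

The first line matches and the second does not. The third one matches as well: since the number of digits after the optional dot is unbounded, round-trip times such as 400 ms are highlighted too, which may or may not be what you want.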

There are many more possibilities offered by multitail. As always, the manpage is the source of truth. The developer has gathered a few examples on his examples page.

How-To: Reboot on OOM 16 Apr 2013 11:57 PM (12 years ago)

Ever had your Linux box run Out of Memory (OOM)? After cleaning up once the OOM killer has kicked in, you may find that even though the OOM killer did a decent job at trying to kill the bad processes, your system ends up in an unknown state, and you might be better off rebooting the host to make sure it comes back up in a state that you know is safe.

This is in no way a solution to your OOM problem and you will most likely need to troubleshoot further the cause of the OOM, nonetheless, this will make sure that when the issue happens (in the middle of the night?), your system gets back on track as fast as possible.

If you use this solution, you really need to make sure that when your system reboots, it is actually functional!

With the warnings out of the way, let’s see how we can make our system reboot when the OOM killer gets in town.

Well, the trick is actually to panic the kernel on OOM and to tell the system to reboot on kernel panic.

This is done via procfs. You could either echo the values in /proc/sys/…, but this will not be kept upon reboot, or set the values in a sysctl file and activate them. I will go for the latter solution.

Create a file called /etc/sysctl.d/oom_reboot.conf and enter the lines below:

# panic kernel on OOM

vm.panic_on_oom=1

# reboot after 10 sec on panic

kernel.panic=10

Then, activate the settings with:

# sysctl -p /etc/sysctl.d/oom_reboot.conf

vm.panic_on_oom = 1

kernel.panic = 10

And you should be good to go. You can tweak the number of seconds to wait before the system reboots to your needs by replacing 10 with whatever value suits you.
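To double check that the values are active, they can be read back from procfs. The sketch below assumes the usual convention that a sysctl key maps to a file under /proc/sys with the dots replaced by slashes, which holds for the two keys used here. The helper names are made up for this example.

```python
import os


def sysctl_to_proc_path(key):
    """Map a sysctl key such as vm.panic_on_oom to its file under /proc/sys."""
    return "/proc/sys/" + key.replace(".", "/")


def read_sysctl(key):
    """Return the current value as a string, or None if the file is missing."""
    path = sysctl_to_proc_path(key)
    if not os.path.exists(path):
        return None
    with open(path) as handle:
        return handle.read().strip()


if __name__ == "__main__":
    for key in ("vm.panic_on_oom", "kernel.panic"):
        print("%s = %s" % (key, read_sysctl(key)))
```

On a host where the settings were applied, this should show the values set in the configuration file.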

If the OOM killer were to kick in again, you would get the same screen as in the screenshot above.

If you want to confirm this works, you can run the program below on a test host (a VM on your workstation is a good candidate):

/**

oom.c

allocate chunks of 10MB of memory indefinitely until you run out of memory

*/

#include <stdlib.h>

#include <stdio.h>

#include <string.h>

#define TEN_MB (10 * 1024 * 1024)

int main(int argc, char **argv){

int c = 0;

while (1){

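char *b = malloc(TEN_MB);

if (b == NULL) break; /* stop cleanly if malloc itself fails */

memset(b, 0, TEN_MB); /* write to the memory so the pages really get allocated */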

printf("Allocated %d MB\n", (++c * 10));

}

return 0;

}

Then compile with:

$ gcc -o oom oom.c

And run it:

$ ./oom

Allocated 10 MB

Allocated 20 MB

...

...